
S3cmd Bucket Backup Script Documentation

In this documentation, we provide all the installation and configuration instructions you need to use our S3cmd Bucket Backup Script for DigitalOcean Spaces & Amazon S3. If you haven’t acquired the script yet, please visit its download site.

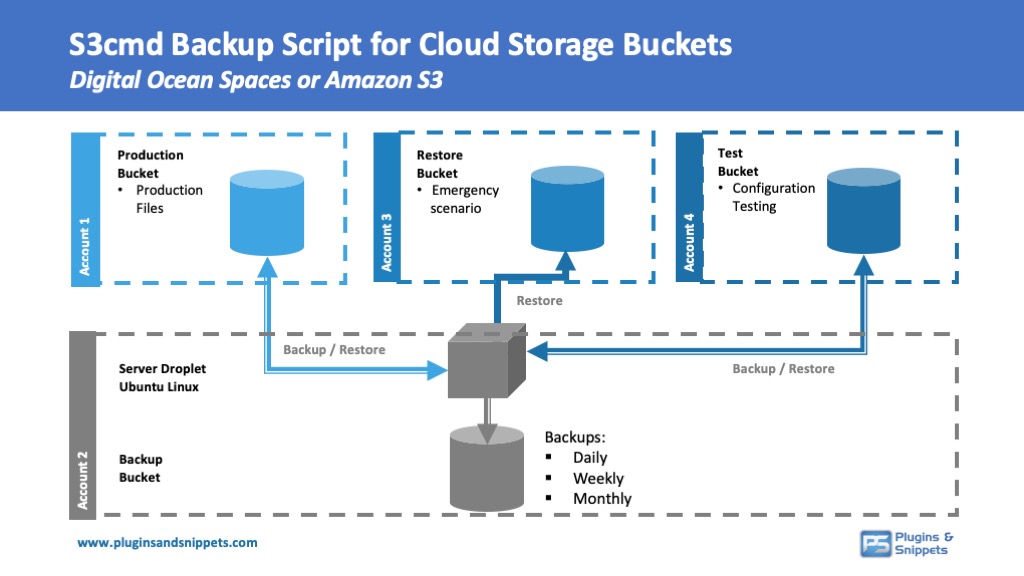

The S3cmd Bucket Backup Script manages daily backups between different Cloud Storage accounts, such as buckets in DigitalOcean Spaces or Amazon S3, using the s3cmd library. It can be installed on any Linux server via Secure Shell (SSH) and connects Production and Backup buckets on two different Cloud Storage accounts.

The S3cmd bucket-backup script is best installed in the /root folder of your Linux server (we recommend Ubuntu). It mainly handles the following:

- Connecting separate Cloud Storage Accounts for Backup, Production, Restore and Test Buckets

- Backup to a different Cloud Storage Account (from Production/Test Bucket to Backup Bucket)

- Automatic Daily, Weekly, and Monthly Backups

- Lifecycle Management for Backups

- Restore Process (from Backup Bucket to Production/Restore/Test Bucket)

- Modus: Production or Test

Buckets

The script can connect up to four buckets, each of which can be on a different Cloud Storage account. Here is an explanation of the purpose of each bucket:

- Backup bucket: Contains all backup files. For security reasons, it is better to keep the Backup bucket on a different account (e.g., a separate DigitalOcean Spaces account).

- Production bucket: Contains the files that a production server offloads to a Cloud Storage bucket, which makes it the Production bucket. This is the bucket that needs to be backed up.

- Restore bucket: If the Production bucket is compromised, you might decide to restore your files to a different bucket. This is the Restore bucket, which you can quickly configure via the main menu before executing a restore. After the restore is done, the Restore bucket can be set as the new Production bucket.

- Test bucket: When you set up your backup process, it is a good idea to test it first. The bucket-backup script therefore lets you run the backup process in Test Modus, in which backups are taken from the Test bucket. You can easily switch from Test to Production Modus later on.

How does it work?

(Diagram notes: * Restore only. The Production/Test Modus determines whether backups are taken from the Production or the Test bucket.)

All files from the Production or Test bucket (depending on the selected Modus) are backed up regularly via the s3cmd library, which also needs to be installed on the server droplet. The bucket-backup script runs on a Linux server, preferably an independent and secured server droplet.

The cronjobs set by the S3cmd Bucket Backup script trigger daily, weekly and monthly backups, and the menu offers ad hoc restores from any selected backup. Each backup is a fresh full backup: all files are first copied to the server droplet and then uploaded to the Backup bucket. Routing the files through the droplet is what allows the buckets to be in separate DigitalOcean Spaces or Amazon S3 accounts.
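A single backup run as described above could look roughly like the following bash sketch. It is an illustration, not the script's actual code: the function name `run_backup`, the config file paths and the `<period>/<timestamp>/` folder naming are assumptions. Each account gets its own s3cmd config file, passed with `-c`, and the files pass through a fresh temporary directory on the droplet so that every run is a full copy.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of one full backup run (not the script's own code).
# Source and destination use separate s3cmd config files, so the buckets
# may belong to different DigitalOcean Spaces / Amazon S3 accounts.

# run_backup SRC_CFG SRC_BUCKET DST_CFG DST_BUCKET PERIOD STAGING_ROOT
# Prints the backup folder it wrote to.
run_backup() {
  local src_cfg="$1" src_bucket="$2" dst_cfg="$3" dst_bucket="$4"
  local period="$5" staging_root="$6"
  local stamp target tmp status

  stamp="$(date +%Y-%m-%d_%H%M)"                 # one new folder per backup
  target="s3://${dst_bucket}/${period}/${stamp}/"

  mkdir -p "$staging_root" || return 1
  # A fresh, empty directory per run, so nothing from older runs leaks in.
  tmp="$(mktemp -d "${staging_root}/run.XXXXXX")" || return 1

  # 1) Download all objects from the source bucket to the droplet,
  # 2) then upload the local copy into the new backup folder.
  s3cmd -c "$src_cfg" sync "s3://${src_bucket}/" "${tmp}/" \
    && s3cmd -c "$dst_cfg" sync "${tmp}/" "$target"
  status=$?

  rm -rf "$tmp"                                  # free droplet disk space
  [ "$status" -eq 0 ] || return "$status"
  echo "$target"
}
```

Usage (placeholder paths): `run_backup /root/.s3cfg-production productionbucket /root/.s3cfg-backup backupbucket daily /root/bucket-backup/tmp`. Staging through the droplet costs disk space and time, but it is the simplest way to move data between accounts that do not share credentials.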

Prerequisites

In order to use this script, you need the following:

- Backup bucket (e.g., Account 1 in DigitalOcean Spaces)

- Production bucket (e.g., Account 2 in DigitalOcean Spaces)

- Linux Server Droplet to host this bucket-backup script (e.g., on Account 1 in DigitalOcean)

- Ubuntu (recommended)

- S3cmd Library (see https://s3tools.org/s3cmd) – can be installed via bucket-backup script

Installation

The S3cmd bucket-backup script needs to be uploaded to your Linux server via FTP or SSH. You can install the folder in /root or in any other location.

To upload the script via SSH, do the following:

1. Unzip the script on your local computer so that the folder /bucket-backup/ and all included files are shown (e.g., on a Mac, use the location /Users/username).

2. Upload the zip file of the S3cmd bucket-backup script with the scp command (e.g., upload from a Mac via SSH: “scp /Users/username/bucket-backup.zip user@ipaddress:/root”).

3. Make sure the unzip utility is installed on your server. SSH: “apt install unzip”

4. Unzip the file on the server so that it creates the directory “/bucket-backup/”. SSH: “unzip bucket-backup.zip”

5. Review and enter the installed folder /bucket-backup/. SSH: “cd /root/bucket-backup/”

6. Execute the menu.sh file. SSH: “sh menu.sh”
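The upload and unzip steps above can also be run from your local computer in one go. The bash sketch below is hypothetical (the function name `install_bucket_backup` is ours, not part of the product). It assumes root SSH access, and `root@203.0.113.10` is a placeholder address.

```bash
#!/usr/bin/env bash
# Hypothetical helper: upload the archive and unpack it in /root.
# Assumes you can log in as root via SSH (key or password).

# install_bucket_backup USER@HOST LOCAL_ZIP
install_bucket_backup() {
  local host="$1" zip="$2" name
  name="$(basename "$zip")"

  # Upload the zip file into /root on the server.
  scp "$zip" "${host}:/root/" || return 1

  # Install unzip if needed, then extract /root/bucket-backup/.
  ssh "$host" "apt-get update && apt-get install -y unzip && cd /root && unzip -o '${name}'"
}

# Usage (placeholder address):
#   install_bucket_backup root@203.0.113.10 /Users/username/bucket-backup.zip
# The menu is interactive, so start it from an SSH session afterwards:
#   ssh root@203.0.113.10
#   cd /root/bucket-backup/ && sh menu.sh
```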

Main Menu – S3cmd Bucket Backup Script

The S3cmd Bucket Backup script is managed via the SSH command “sh menu.sh”, which opens the following menu:

Directory: /root/bucket-backup

Backup Modus: PRODUCTION

Backup Bucket: backupbucket

Production Bucket: productionbucket

Restore Bucket: restorebucket

Test Bucket: testbucket

Quick Actions:

(01) Create Backup (productionbucket => backupbucket)

(02) Restore to PRODUCTION Bucket (backupbucket => productionbucket)

(03) Restore to RESTORE Bucket (backupbucket => restorebucket)

(04) Restore to TEST Bucket (backupbucket => testbucket)

Backup Bucket:

(05) List Buckets in Backup Account

(06) List Backups in Backup Bucket backupbucket

Configuration and Setup:

(07) Change Modus: Test/Live (Current Modus: production)

(08) Set Backup Period (Lifecycle Policy)

(09) Set API Settings

(10) Check API Connections

(11) Make Restore New Production Bucket

(12) Update s3cmd Library

(13) Set Folders Daily/Weekly/Monthly

(14) Set Cronjobs

(15) Set Timezone

(16) Closing Ports

(17) Quick Setup

Documentation

(18) View READ.md

…

Please select 1-18 or E to Exit this Menu:

Now you can simply enter any menu number from 1 to 18, or “E” to exit. Each menu number runs one of the other scripts included in the S3cmd bucket-backup script package.

If you are using the menu for the first time, we recommend selecting menu 17, which walks you through a quick setup of the bucket-backup script.

The menus are explained below.

(01) Create Backup

Selecting menu 1 executes an immediate full backup, either from the Production bucket (Production Modus) or from the Test bucket (Test Modus).

(02) Restore to PRODUCTION Bucket

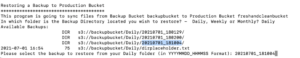

Menu 2 executes a restore from the Backup bucket to the Production bucket. The script asks you to select which backup to restore.

(03) Restore to RESTORE Bucket

Menu 3 executes a restore from the Backup bucket to the Restore bucket. As with the restore to the Production bucket, the script asks you to select which backup to restore. Restoring to a separate Restore bucket allows a fresh start in case of any problems with the Production bucket.

See also menu 11, which will set the Restore bucket as the new Production bucket.

(04) Restore to TEST Bucket

Menu 4 executes a restore from the Backup bucket to the Test bucket. As with the restore to the Production bucket, the script asks you to select which backup to restore.
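The three restore menus differ only in the target bucket. A restore could be sketched in bash as below. This is an illustration, not the script's code: the function name, config paths and the backup folder name `daily/2024-01-01_0500` are hypothetical. The sketch deliberately omits s3cmd's `--delete-removed` option, so files already in the target bucket are never deleted.

```bash
#!/usr/bin/env bash
# Hypothetical restore sketch: backup folder -> droplet -> target bucket.

# restore_backup BACKUP_CFG BACKUP_BUCKET BACKUP_FOLDER TARGET_CFG TARGET_BUCKET STAGING_ROOT
restore_backup() {
  local bcfg="$1" bbucket="$2" folder="${3%/}" tcfg="$4" tbucket="$5" root="$6"
  local tmp status

  mkdir -p "$root" || return 1
  tmp="$(mktemp -d "${root}/restore.XXXXXX")" || return 1

  # Download the selected backup, then upload it to the target bucket.
  # No --delete-removed: nothing already in the target bucket is deleted.
  s3cmd -c "$bcfg" sync "s3://${bbucket}/${folder}/" "${tmp}/" \
    && s3cmd -c "$tcfg" sync "${tmp}/" "s3://${tbucket}/"
  status=$?

  rm -rf "$tmp"                      # free droplet disk space
  return "$status"
}

# Usage, e.g. restoring into the Restore bucket:
#   restore_backup /root/.s3cfg-backup backupbucket daily/2024-01-01_0500 \
#                  /root/.s3cfg-restore restorebucket /root/bucket-backup/tmp
```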

(05) List Buckets in Backup Account

Menu 5 provides a list of all buckets in the Backup account.

(06) List Backups in Backup Bucket

Menu 6 lists the folder structure in the Backup bucket. It shows how many backups are available in the Daily, Weekly and Monthly folders, as well as the date/time of each available backup.

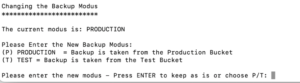
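A similar overview can be produced by hand with `s3cmd ls`, which shows the "folders" (key prefixes) below a given path as `DIR` lines. The bash sketch below counts them per period. The function name, config path and the lowercase `daily/`, `weekly/` and `monthly/` prefixes are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: list and count backup folders per period.

# list_backups BACKUP_CFG BACKUP_BUCKET
list_backups() {
  local cfg="$1" bucket="$2" period
  for period in daily weekly monthly; do
    echo "== ${period} =="
    # s3cmd ls prints sub-folders as lines of the form "DIR  s3://...".
    s3cmd -c "$cfg" ls "s3://${bucket}/${period}/" \
      | awk '$1 == "DIR" { n++; print $2 } END { print n+0 " backup(s)" }'
  done
}

# Usage: list_backups /root/.s3cfg-backup backupbucket
```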

(07) Change Modus: Production/Test

This menu switches between Production and Test Modus. In Production Modus, backups are taken from the Production bucket.

In Test Modus, backups are taken from the Test bucket. This lets you test the bucket-backup script first and verify that everything works as it should.

(08) Set Backup Period (Lifecycle Policy)

This menu defines how long daily, weekly and monthly backups are kept before they are deleted from the Backup bucket.

Please note that these periods determine the size of the Backup bucket. For example, if you keep daily backups for 30 days, weekly backups for 8 weeks and monthly backups for 6 months, you keep 30 + 8 + 6 = 44 backups of every production file. If your Production bucket holds 20 GB, this amounts to 44 × 20 GB = 880 GB, so plan for roughly 1 TB of storage in the Backup bucket. Feel free to adjust the backup periods to your needs.

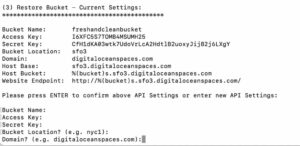

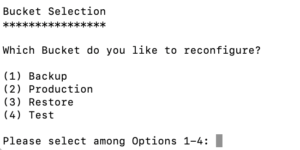
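The storage estimate is simple arithmetic: (daily days + weekly weeks + monthly months) full copies multiplied by the Production bucket size. The small bash helper below is hypothetical and not part of the script. It makes it easy to try other retention periods. It uses integer arithmetic and ignores future growth of the Production bucket.

```bash
#!/usr/bin/env bash
# estimate_backup_storage SIZE_GB DAILY_DAYS WEEKLY_WEEKS MONTHLY_MONTHS
# Every retained backup is a full copy, so storage = copies x bucket size.
estimate_backup_storage() {
  local size_gb="$1" daily="$2" weekly="$3" monthly="$4"
  local copies=$(( daily + weekly + monthly ))
  echo "$(( copies * size_gb ))"
}

# Usage: estimate_backup_storage 20 30 8 6   # prints 880 (44 copies x 20 GB)
```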

(09) Set API Settings

Opens a menu to configure API access for each bucket (Backup, Production, Restore or Test). The menu uses a pre-configured standard .s3cfg template and only asks for five inputs: the bucket name, access key, secret key, region and domain. These five values are then used to update the .s3cfg configuration file of each bucket.
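For reference, the access key, secret key, region and domain map onto standard keys of an s3cmd configuration file. The bash sketch below writes a minimal config. It is not the script's template: the function name and file path are hypothetical, and the endpoint pattern `<region>.<domain>` matches DigitalOcean Spaces (e.g., `ams3.digitaloceanspaces.com`) but not Amazon S3. The bucket name is not an s3cmd config key, so it is left out here.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: write a minimal s3cmd config for one account.
# Endpoint pattern assumes DigitalOcean Spaces: <region>.<domain>.

# write_s3cfg FILE ACCESS_KEY SECRET_KEY REGION DOMAIN
write_s3cfg() {
  local file="$1" access="$2" secret="$3" region="$4" domain="$5"
  (
    umask 077   # the file holds secret keys: create it owner-only
    cat > "$file" <<EOF
[default]
access_key = ${access}
secret_key = ${secret}
bucket_location = ${region}
host_base = ${region}.${domain}
host_bucket = %(bucket)s.${region}.${domain}
use_https = True
EOF
  ) && chmod 600 "$file"   # also tighten permissions of an existing file
}

# Usage: write_s3cfg /root/.s3cfg-backup "$KEY" "$SECRET" ams3 digitaloceanspaces.com
#        s3cmd -c /root/.s3cfg-backup ls s3://backupbucket/
```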

(10) Check API Connections

Runs a quick check on all four buckets to find out whether their contents can be accessed, i.e., whether the API connections work. If you don’t see any bucket content, go to menu (09) to reset the API keys.
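A connection check amounts to listing each bucket with its own credentials and looking at the exit status of s3cmd. The bash sketch below is hypothetical. Each argument pairs a config file with a bucket as `CONFIG:BUCKET`, and the paths in the usage comment are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: test API access to several buckets.

# check_connections CONFIG:BUCKET [CONFIG:BUCKET ...]
# Returns non-zero if at least one bucket cannot be listed.
check_connections() {
  local pair cfg bucket failed=0
  for pair in "$@"; do
    cfg="${pair%%:*}"      # text before the first colon
    bucket="${pair#*:}"    # text after the first colon
    if s3cmd -c "$cfg" ls "s3://${bucket}/" >/dev/null 2>&1; then
      echo "OK      ${bucket}"
    else
      echo "FAILED  ${bucket}"
      failed=1
    fi
  done
  return "$failed"
}

# Usage:
#   check_connections /root/.s3cfg-backup:backupbucket \
#     /root/.s3cfg-production:productionbucket \
#     /root/.s3cfg-restore:restorebucket /root/.s3cfg-test:testbucket
```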

(11) Make Restore New Production Bucket

Use this menu only when you have restored a backup to the Restore bucket instead of the Production bucket. It sets the Restore bucket as the new Production bucket.

(12) Update s3cmd Library

Re-installs and updates the s3cmd library, which the bucket backup script requires. The menu executes the following SSH commands:

sudo apt-get update

sudo apt-get install s3cmd

See also

https://s3tools.org/s3cmd

https://github.com/s3tools/s3cmd

https://docs.digitalocean.com/products/spaces/resources/s3cmd/

(13) Set Folders Daily/Weekly/Monthly

This menu uploads a text info file so that the placeholder folders Daily, Weekly and Monthly appear in the Backup bucket. Cloud Storage buckets such as DigitalOcean Spaces or Amazon S3 have no real folders: objects are stored under key prefixes (e.g., daily/...), and user interfaces display these prefixes as folders to make the file structure easy to understand. The bucket backup script uses the same convention to make it clear which types of backups are available.
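A folder becomes visible as soon as one object key starts with its prefix. The bash sketch below therefore uploads one small file per period. The function name, the lowercase prefixes and the file name `info.txt` are hypothetical, not necessarily what the script uses.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: create the Daily/Weekly/Monthly "folders" by
# uploading one small object under each prefix.

# create_backup_folders BACKUP_CFG BACKUP_BUCKET
create_backup_folders() {
  local cfg="$1" bucket="$2" tmp period
  tmp="$(mktemp)" || return 1
  echo "Backup folder created by bucket-backup." > "$tmp"
  for period in daily weekly monthly; do
    # The "folder" appears once an object key starts with "<period>/".
    if ! s3cmd -c "$cfg" put "$tmp" "s3://${bucket}/${period}/info.txt"; then
      rm -f "$tmp"
      return 1
    fi
  done
  rm -f "$tmp"
}

# Usage: create_backup_folders /root/.s3cfg-backup backupbucket
```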

(14) Set Cronjobs

This menu creates the cronjobs that trigger daily, weekly and monthly backups. By default, the following settings are used:

- Daily Backup: Executed at 5am local time every day

- Weekly Backup: Executed at 11am local time every Sunday

- Monthly Backup: Executed at 9am local time every 1st of the month
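In crontab syntax (minute, hour, day of month, month, day of week), these defaults correspond to the entries printed by the bash sketch below. The function name, the script name `backup.sh` and its `daily`/`weekly`/`monthly` argument are hypothetical. Check `crontab -l` on your server for the commands the menu actually installs. Cron evaluates these times in the server's timezone, so the timezone setting affects when backups run.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: print crontab lines for the default schedule.
# Fields: minute hour day-of-month month day-of-week command

# print_backup_crontab SCRIPT_DIR
print_backup_crontab() {
  local dir="$1"
  echo "0 5 * * * cd ${dir} && sh backup.sh daily"     # 05:00 every day
  echo "0 11 * * 0 cd ${dir} && sh backup.sh weekly"   # 11:00 every Sunday (0)
  echo "0 9 1 * * cd ${dir} && sh backup.sh monthly"   # 09:00 on the 1st
}

# Append to the current user's crontab:
#   (crontab -l 2>/dev/null; print_backup_crontab /root/bucket-backup) | crontab -
```

An additional daily backup, as discussed in the FAQ, would simply be one more line with a different hour, e.g. `0 17 * * *`.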

(15) Set Timezone

This menu offers a selection of 24 geographic locations, which can be used to change your server’s timezone quickly.

(16) Closing Ports

This menu closes all ports on the server except the SSH port. This is an essential security measure and is recommended when running the server as a standalone droplet. However, please be aware that additional measures are needed to protect your server fully, and maintaining its security is an ongoing task.
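On Ubuntu, a comparable "everything closed except SSH" policy is commonly set up with ufw. We don't know which tool menu 16 uses, so treat this bash sketch (hypothetical function name) as an illustration only. SSH must be allowed before the firewall is enabled, or you may lock yourself out. If SSH runs on a non-standard port, allow that port (e.g., `ufw allow 2222/tcp`) instead of the `OpenSSH` profile.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: deny all incoming traffic except SSH (run as root).
close_ports_except_ssh() {
  ufw default deny incoming  || return 1
  ufw default allow outgoing || return 1
  ufw allow OpenSSH          || return 1   # allow SSH BEFORE enabling
  ufw --force enable                       # --force: skip the y/n prompt
}
```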

(17) Quick Setup

The quick setup menu walks you through all of the menus above that are essential for setting up the bucket backup solution on a Linux server.


Frequently Asked Questions

Where do I best install the S3cmd bucket backup script?

We recommend installing the backup script on an independent server droplet, e.g., a DigitalOcean droplet running Ubuntu. In principle, however, you can install the script on any Linux server, since it runs in Bash and is operated via SSH.

Do the two cloud storage buckets, Production and Backup bucket, need to be located in the same region (datacenter)?

No, they can be in completely different locations. However, if you want fast backups, it is best to choose the same region (datacenter).

How much disk space will this bucket backup solution require?

Server Droplet: The server droplet hosts the script and the temporary files created during a backup or restore. The script itself takes next to no space, but the droplet needs at least as much storage as the size of the Production bucket. We recommend a safety margin, so depending on your growth rate, plan for 2.0x to 10.0x the size of your Production bucket.

Backup Bucket: The required disk space depends heavily on how many backups you keep in the Backup bucket. If, e.g., you keep daily backups for 30 days, weekly backups for eight weeks and monthly backups for six months, 44 backups of every production file exist. If your Production bucket holds 20 GB, you will need 44 × 20 GB = 880 GB, i.e., roughly 1 TB of storage in the Backup bucket. It is therefore essential to choose an archiving strategy that balances your objectives against the storage space.
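Before the first backup, you can compare the droplet's free disk space with the size of the Production bucket. The bash sketch below (hypothetical function name) only does the comparison with an integer safety factor. The comments show commands that could supply the two numbers: `s3cmd du` reports a bucket's total size in bytes in its first column, and GNU `df --output=avail -B1` reports free bytes. The paths and bucket name there are placeholders.

```bash
#!/usr/bin/env bash
# has_enough_space BUCKET_BYTES FREE_BYTES [FACTOR]
# Succeeds if FREE_BYTES >= BUCKET_BYTES * FACTOR (integer, default 2).
has_enough_space() {
  local bucket_bytes="$1" free_bytes="$2" factor="${3:-2}"
  [ "$free_bytes" -ge $(( bucket_bytes * factor )) ]
}

# Possible inputs:
#   bucket=$(s3cmd -c /root/.s3cfg-production du s3://productionbucket | awk '{print $1}')
#   free=$(df --output=avail -B1 /root | tail -n 1)
#   has_enough_space "$bucket" "$free" 2 || echo "Droplet disk too small"
```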

Can you also do incremental backups?

Not at the moment. Although the script uses s3cmd sync commands to copy between buckets, every backup is written to a new folder, so each backup is a full backup. This gives users accurate and complete backups for a selected point in time. We might add an incremental backup option later, depending on customer feedback.

Is Installation Support included?

The price does not include installation support. If you want, we can set up the script for you, but we will charge an extra fee. Contact us if you are interested.

Why are the backups not zipped?

The goal was to create a high-quality backup solution across multiple Cloud Storage accounts. For this reason, we wanted to offer backup folders that you can open directly to find out quickly whether a file is there or not. If you have to unzip a backup first, it takes additional time to find out what it contains, and that is time you usually won’t have in a restore scenario, which is an emergency.

With which cloud storage providers does the S3cmd Bucket Backup script work?

The script works with cloud storage providers supported by the s3cmd library, such as Amazon S3 and DigitalOcean Spaces. We believe it will work with other S3-compatible providers as well.

Does restore backup delete files on my Production bucket?

No. The restore uses the sync command of the s3cmd library to sync two buckets. No files are deleted; only missing files are transferred.

Can I take more than one Daily Backup?

Yes, you can. Duplicate the daily cronjob in the crontab and schedule the copy at a different hour. Keep in mind, however, that this multiplies the required storage space.

What happens when my license expires? Will the script still work?

Yes, the script will still work but you will not receive further updates or product support.

Support

For any questions or comments, please feel free to visit the product page or contact us via email at [email protected].